What is an LLM

Model Definition

Large language models (LLMs) like ChatGPT are taking the tech world by storm. Wikipedia defines an LLM as:

In other words:

LLMs are trained on vast datasets of text such as books, websites, and user-generated content. Given an initial prompt, they generate new text that continues it in a natural way.

An LLM is essentially a neural network with a very large number of parameters. Roughly speaking, the more parameters a model has, the more capable it tends to be, which is why model size is usually quoted as a parameter count. For example, GPT-3 has 175 billion parameters, and GPT-4 is rumored to have over 1 trillion.
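To make "number of parameters" concrete, here is a minimal sketch that counts the weights and biases of a tiny fully connected network. The function name `count_parameters` and the layer sizes are made up for illustration; real LLMs use transformer layers, not this simple stack, but the idea of counting every learned number is the same.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes is a hypothetical list such as [4, 8, 3]:
    4 inputs, one hidden layer of 8 units, 3 outputs.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per input/output pair
        total += n_out         # one bias per output unit
    return total


print(count_parameters([4, 8, 3]))  # prints 67
```

A network this small has 67 parameters; GPT-3 has 175 billion of them.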

But what exactly does a model look like?

A model is just a file

A language model is just a binary file:

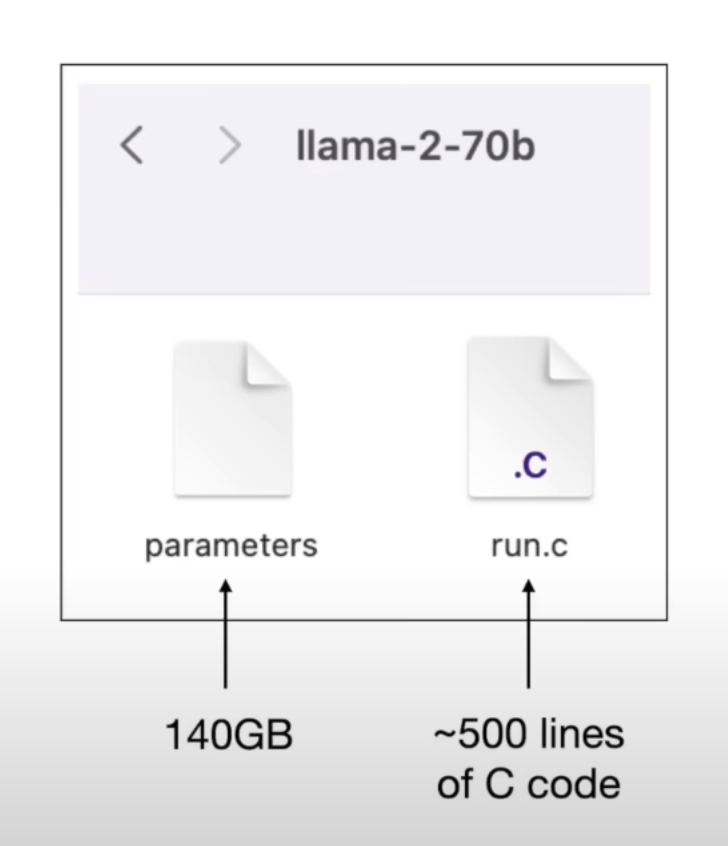

(from Andrej Karpathy's build a GPT model from scratch)

In the image above, the parameters file is Meta's Llama-2-70b model. It is 140 GB in size and holds 70 billion parameters, each stored as a number that takes 2 bytes. The run.c file is the inference program, which is used to query the model.
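The file size follows directly from the parameter count. The sketch below is a back-of-the-envelope calculation that assumes every parameter is stored in 2 bytes (16-bit floating point); the helper name `model_file_size_gb` is hypothetical.

```python
def model_file_size_gb(num_parameters, bytes_per_parameter=2):
    """Approximate size of a parameters file in gigabytes (1 GB = 1e9 bytes).

    bytes_per_parameter=2 is an assumption (16-bit floats); other
    storage formats would give a different size.
    """
    return num_parameters * bytes_per_parameter / 1e9


print(model_file_size_gb(70_000_000_000))  # prints 140.0
```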

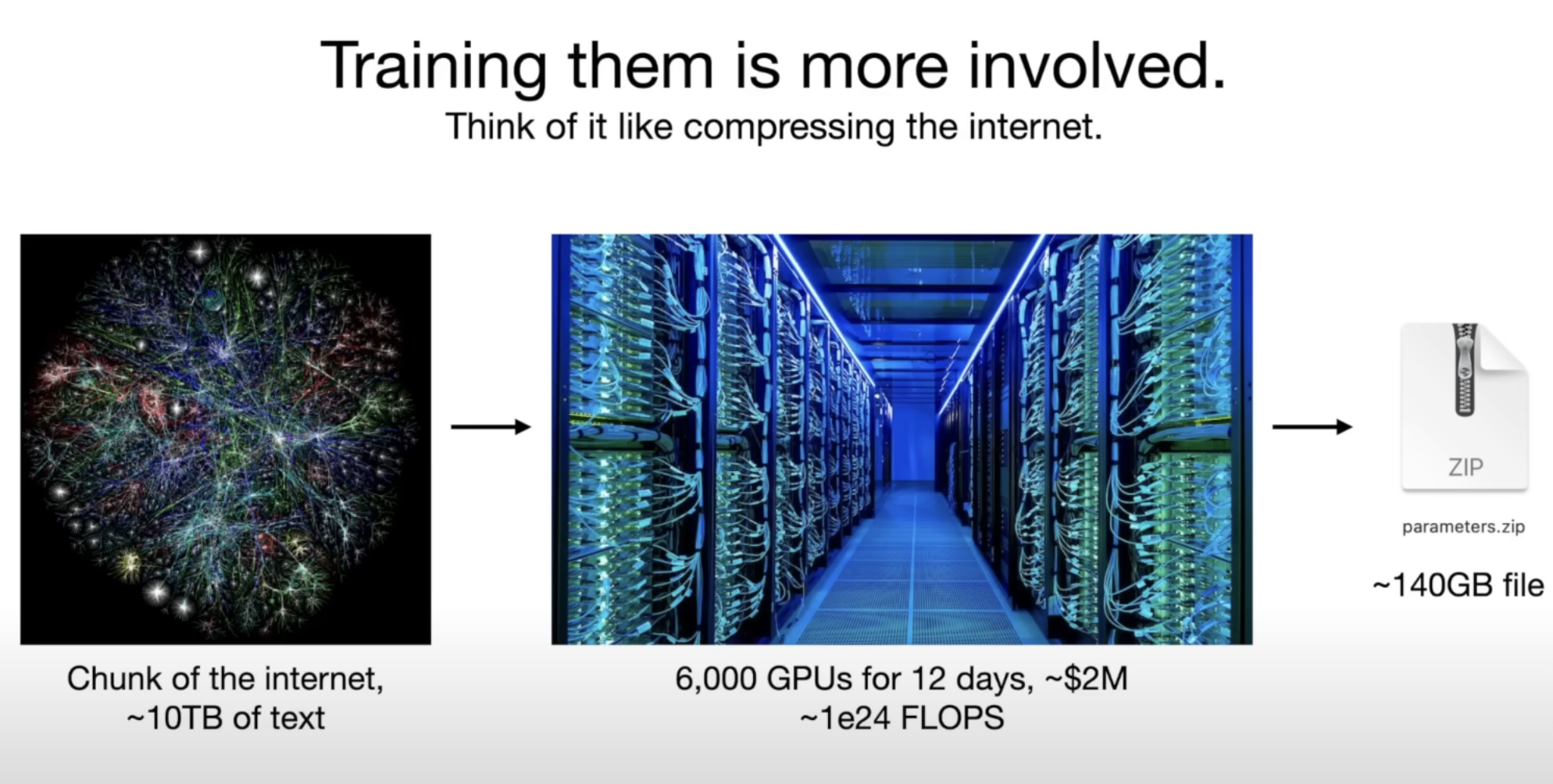

Training these very large models is extremely expensive. It costs millions of dollars to train a model like GPT-3.

(from Andrej Karpathy's build a GPT model from scratch)

As of today, the leading model, GPT-4, is reportedly no longer a single model but a mixture of several models. Each one is trained or fine-tuned on a specific domain, and they work together to deliver the best performance during inference.

But don't worry: our goal is to understand and learn the fundamentals of large language models. Fortunately, we can still train a model (with far fewer parameters) on our personal computers. We will dive in and code it together, step by step, in the Let's Code LLM section soon.

Model Inference

Once the model is trained and ready, a user can query it with a question. The question text is fed into that 140 GB file and processed token by token (a token is roughly a word or a piece of a word), and the model returns the most relevant text as its output.

Here, "most relevant" means that the model returns the text that is most likely to come next after the input text.

For example, if the input is "I like to eat", the model may return "apple".

"apple" is returned because it is the most likely continuation of "I like to eat", based on the large datasets the model was trained on.
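As a rough sketch of that last step, suppose the model has already produced a probability for each candidate continuation of "I like to eat". The numbers below are invented for illustration, and `most_likely_next` is a hypothetical helper; a real model computes these probabilities from its parameters.

```python
# Hypothetical probabilities for what follows "I like to eat".
# The values are made up; a real model computes them.
next_word_probs = {"apple": 0.45, "banana": 0.30, "pizza": 0.20, "rocks": 0.05}


def most_likely_next(probs):
    """Return the candidate with the highest probability (a greedy choice)."""
    return max(probs, key=probs.get)


prompt = "I like to eat"
print(prompt, most_likely_next(next_word_probs))  # prints: I like to eat apple
```

Always picking the top candidate is only one possible strategy; it is used here because it matches the "most likely" description above.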

Remember the books and websites we mentioned above? Based on the data we provide, you can think of it this way:

"I like to eat apple" is a very common sentence that the model has seen many times.

"I like to eat banana" is also a common sentence, but less common than the one above.

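So during training, the model learns to assign a higher probability to "apple" than to "banana" as the continuation of "I like to eat".

These learned probabilities end up encoded in the model's parameters file. (Strictly speaking, the file stores weights: the numeric parameters from which the network computes those probabilities.)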
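To see how frequencies in the data can turn into probabilities, here is a deliberately simplified sketch that just counts continuations in a made-up four-sentence corpus. This is not how an LLM is actually trained (a neural network adjusts its weights gradually rather than counting), and the function `next_word_probabilities` is hypothetical, but it shows why the more common sentence wins.

```python
from collections import Counter

# A made-up miniature "training set"; real datasets are vastly larger.
corpus = [
    "I like to eat apple",
    "I like to eat apple",
    "I like to eat apple",
    "I like to eat banana",
]


def next_word_probabilities(sentences, context):
    """Estimate P(next word | context) by counting what follows the context."""
    counts = Counter()
    for sentence in sentences:
        if sentence.startswith(context + " "):
            rest = sentence[len(context) + 1:].split()
            if rest:
                counts[rest[0]] += 1
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}


print(next_word_probabilities(corpus, "I like to eat"))
# prints {'apple': 0.75, 'banana': 0.25}
```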

So, as a mental model, an LLM is a probabilistic database: given a piece of text and its surrounding context, it assigns a probability distribution over what comes next.
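The "probabilistic database" picture can be sketched as a lookup table that maps a context to a distribution over the next word, queried repeatedly to produce text. Everything here is an assumption for illustration: the table `toy_model`, its numbers, and the fact that the context is only the single previous word. A real LLM conditions on much longer context and computes the distribution instead of storing it.

```python
# Hypothetical table: previous word -> distribution over the next word.
toy_model = {
    "I": {"like": 0.9, "eat": 0.1},
    "like": {"to": 0.8, "apple": 0.2},
    "to": {"eat": 0.7, "like": 0.3},
    "eat": {"apple": 0.6, "banana": 0.4},
}


def generate(model, start, max_new_words=10):
    """Extend `start` one word at a time, always taking the likeliest word."""
    words = [start]
    for _ in range(max_new_words):
        distribution = model.get(words[-1])
        if distribution is None:  # no known continuation, so stop
            break
        words.append(max(distribution, key=distribution.get))
    return " ".join(words)


print(generate(toy_model, "I"))  # prints: I like to eat apple
```

Each generated word becomes part of the context for the next lookup, which is the same loop an inference program runs at a much larger scale.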

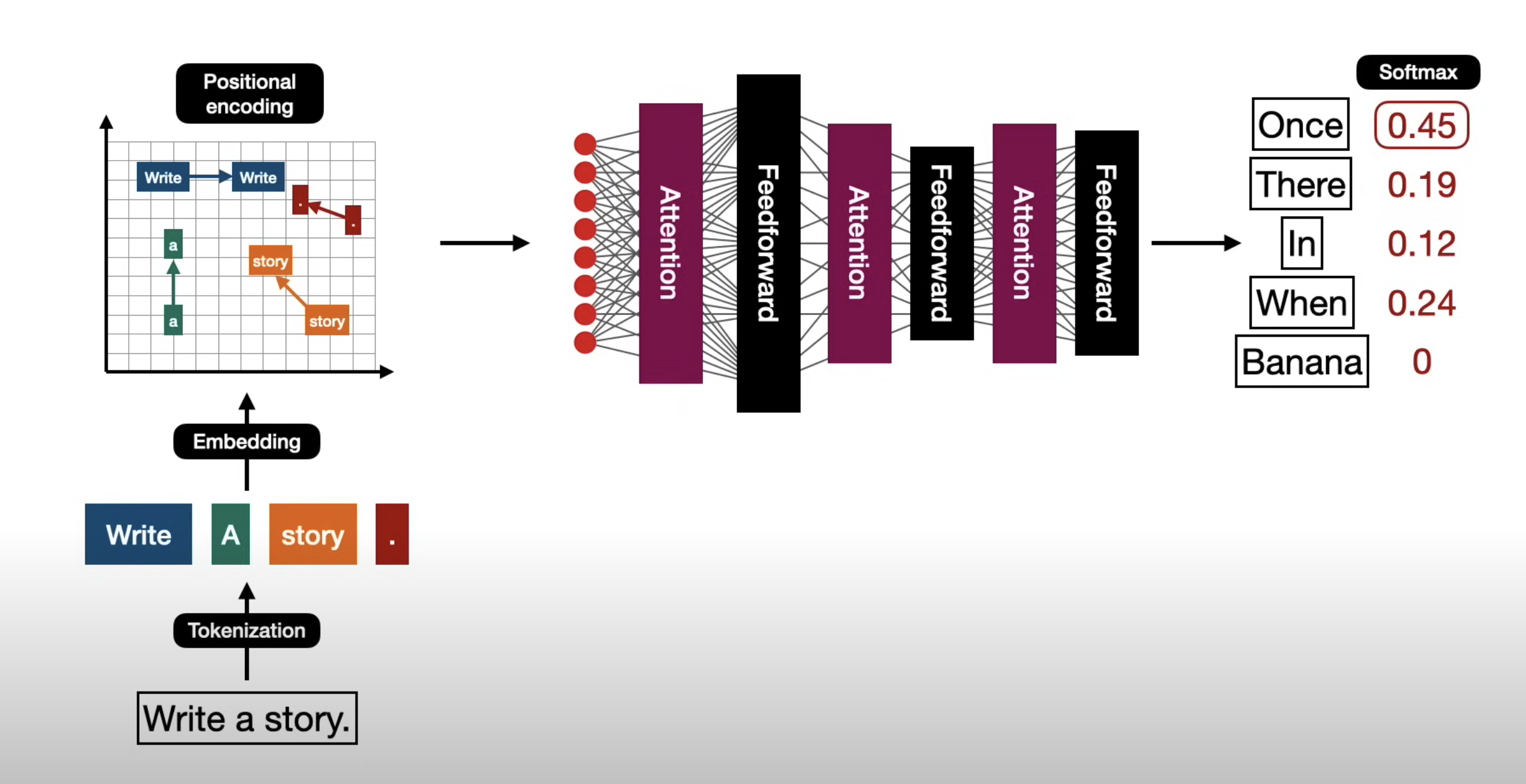

This sounded impossible not long ago. But in 2017 the paper "Attention Is All You Need" introduced the transformer architecture, which makes this kind of contextual understanding possible by training a neural network on a very large dataset.

Model Architecture

Before the advent of LLMs, neural networks were typically trained on relatively small datasets and had little capacity for contextual understanding. As a result, early models could not understand text the way humans do.

When the "Attention Is All You Need" paper was first published, it was aimed at training language translation models. However, the team at OpenAI discovered that the transformer architecture was also the key to next-token prediction. Once trained on a large share of the text on the internet, such a model could pick up the context of almost any text and coherently complete a sentence, much like a human.

Below is a diagram showing what happens inside the model training process:

Of course, it is hard to understand the first time we see it, but don't worry: we will walk through it in the following topics.

Before we delve into the code and the mathematical details, let's first understand, at a conceptual level, how the model operates.